Deciphering the Dangers of Big Tech’s Dominance: A Dialogue with Safiya Noble

Despite our near-dependence on technology to communicate, work, socialize, and consume, we don’t quite understand what our digital traces are. And given Big Tech’s global dominance and notorious reticence, its exploitation of these gaps poses a serious threat.

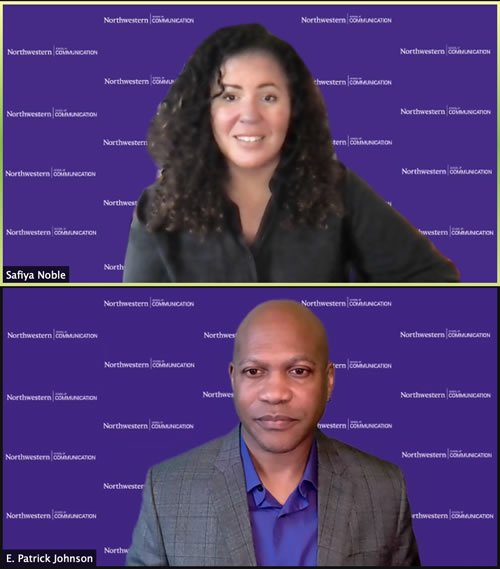

In a talk that was as much a warning as a call to action, Safiya Noble, a scholar of technology bias, shared this and more about her research and advocacy during the ninety-minute April 22 Dialogue with the Dean event, hosted over Zoom by School of Communication Dean E. Patrick Johnson.

“Sometimes the most banal kinds of things are the things we should be paying attention to,” Noble said of internet searches.

Noble, an associate professor in the Department of Information Studies and the codirector of the Center for Critical Internet Inquiry at University of California, Los Angeles, is the author of Algorithms of Oppression: How Search Engines Reinforce Racism.

Her entrée into graduate school and her research area came after a 15-year stint working in corporate America. She noticed that tech and related industries were talking more and more about the emerging dominance of algorithms, essentially the data-tracing code that yields our internet search results and more. Noble, a Fresno, Calif.-bred daughter of a white woman and a Black man, began to consider how this creeping technological control might be discriminatory. Although professors and peers rebuked her early work, the missteps of Facebook, Amazon, and Google have since proved her right.

Google, in particular, has been in Noble’s sights because of its ubiquity and its tendency to leave communities of color out of its code, or to present them in ways that are inaccurate or offensive. Whether it is the oversexualization and fetishization of Black and Asian women in search results or the fact that more criminal background check ads surface for people with “Black-sounding” names, bias and discrimination are no longer problems that users can willfully ignore.

“We should be listening to the people whose voices are in the margins; they tell us a lot about the world we live in,” she said. “And we should not wait until there are mountains of harm before we decide to do something about what’s happening.”

Yet it’s typically Black and brown researchers and LGBTQIA+ scholars and journalists who are sounding the alarm, she pointed out, and they’re bringing organization, art, and joy to the resistance. But the exhaustion and trauma that users experience from a constant stream of content showing police violence, for instance, in their Facebook and Twitter feeds have led Noble and other scholars in her area to drastically reconsider their algorithmic footprints.

“I constantly try to encourage young people, my students, to think of knowledge as kind of like slow organic food: the acquisition of it, the making of it, the cultivation of it,” she said. The quick results one sees in a search for, say, insurance quotes might appear to be consistent, empirical data, but when two people of different races enter the same variables, they do not get the same numbers.

“We don’t understand fully what our digital profile is that’s informing the kinds of things we find when we’re looking,” she said. “(Search engines are) aggregating us into different consumer groups that can be sold to customers, and those are the people who pay to attract our attention and our business. So that process of constantly aggregating and disaggregating us into different consumer groups is always at play, and that’s very hard to, you know… a lot of subjectivity, a lot of play here.”

Big Tech, she explained, is a lot like the tobacco industry. For decades, aggressive advertising and lobbying ensured tobacco companies a stranglehold on the market, which helped them tamp down any lawsuits or bad press that threatened their sales, until the evidence of tobacco’s danger became impossible to refute. Silicon Valley’s deep pockets and propaganda now operate similarly, and we don’t yet know the full harm that this unregulated industry is inflicting on its users, though we got a real-time taste earlier in the year when the fate of our democracy was at stake.

Johnson asked Noble how the School of Communication ought to approach Big Tech, both as a source of leverage and as a subject of critique. She called it a serious issue for the scholarly community: so much research is funded by these companies that they may reach a point where they can cherry-pick favorable researchers. She added that university leaders need to come together to pressure the government to make Big Tech pay fair taxes and put money back into the communities it has devastated.

“There’s still a lot of work to be done, because ultimately, you know, even if we resolve some of these misrepresentations of people in Google, there are these broader issues about the level of surveillance, the level of information and knowledge that this company holds about the public,” she said.

It’s that knowledge that the most affected need to take back.

Noble and Johnson’s conversation concluded the 2020-21 Dialogue with the Dean series. The inaugural year’s guests were John L. Jackson, Jr., dean of the Annenberg School for Communication at the University of Pennsylvania, and Ruha Benjamin, professor of African American Studies at Princeton University. The series engages emerging and established artists and scholars in conversations about social justice, politics, media, communication, and culture.